AI Chaos in the C-Suite: Trump's Fighter-Jet Video Sparks Multi-Million Dollar Crisis

According to new reports on October 21, 2025, musician Kenny Loggins has demanded the immediate removal of his song "Danger Zone" from an AI-generated video posted by Donald Trump on Truth Social. The video, which depicts an AI-generated Trump as "King Trump" flying a jet and dumping a brown substance on "No Kings" protesters, was posted over the weekend, sparking widespread controversy and a public statement from Loggins that the use was unauthorized and violated his personal ethics against divisive political content.

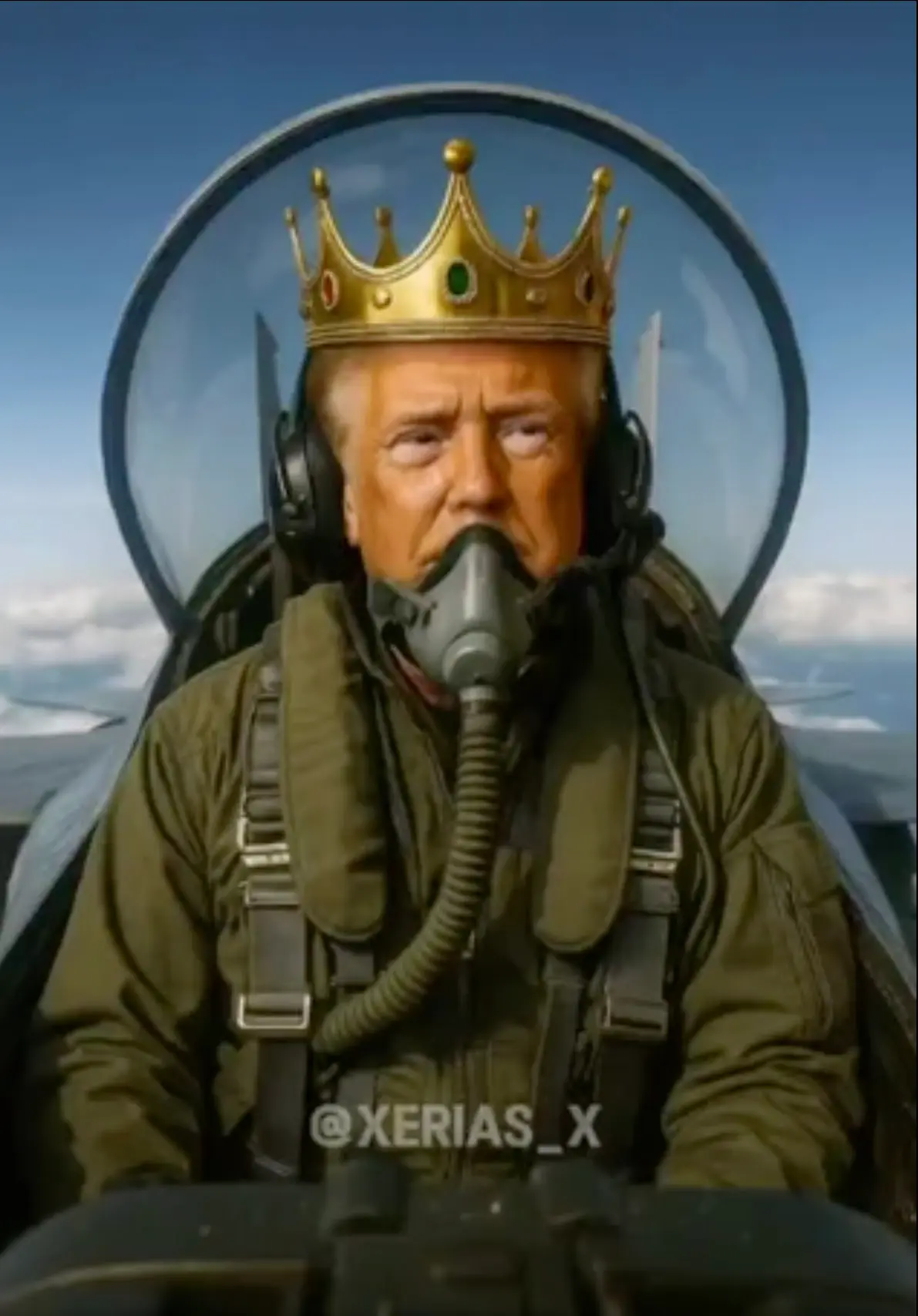

When President Donald Trump posted a 19-second, AI-generated video on Truth Social this weekend, portraying himself as a fighter pilot wearing a gold crown and dropping sewage on “No Kings” protesters over New York City, it was far more than a political jab.

For corporate boards, investors, and technology platforms globally, the crude satire has become a high-stakes, real-world case study in the unfolding financial and legal disasters of unregulated AI-generated media. This isn't just viral content; it's a multi-million dollar liability in the making that every CEO must analyze immediately.

The Viral Stunt That Threatens the Balance Sheet

Set to Kenny Loggins' iconic movie anthem "Danger Zone," the clip shows a crowned "King Trump" soaring over Manhattan, unloading brown sludge onto crowds of anti-Trump demonstrators who had gathered for the nationwide "No Kings" protests. The video audaciously incorporated unauthorized footage, including the likeness of prominent Democratic influencer Harry Sisson, who was seen getting covered in the digital sewage.

The backlash was instant and decisive. Loggins’ representatives formally demanded the immediate removal of the song, citing unauthorized use of the copyrighted track, a clear sign that creators and rights-holders are ready to fight back against AI misuse. The brazen video post, which House Speaker Mike Johnson defended as "satire," highlights a shocking disregard for intellectual property and personal likenesses, ironically reinforcing the very authoritarian themes the protests were decrying.

The Immediate Financial and Legal Alarm

This controversy has ripped the cover off three critical risk zones that now define governance in the AI era. For corporate leaders and investors, the key takeaways are not about politics but about risk management and the potential for explosive costs.

- Massive Legal Exposure: The video combines copyrighted music, real-world likenesses, and AI synthesis—a perfect legal storm that risks claims of copyright infringement, defamation, and the unauthorized commercial use of a person's image. Entities like Truth Social or affiliated corporate partners that profit from the distribution of this content could be directly exposed to costly litigation.

- Reputation and Brand Safety: Firms tied to platforms that host or amplify such politically charged and manipulated deepfakes face an immediate crisis of public trust. Advertiser confidence can plummet overnight, directly impacting platform revenues and stock value. The cost of brand-repair in a deepfake scandal can be astronomical.

- Escalating Investor Risk: Modern investment, especially through ESG (Environmental, Social, and Governance) frameworks, now scrutinizes a firm's AI governance strategy. Failure to control manipulative content is a governance failure that can trigger regulatory penalties and significant market valuation discounts.

AI Deepfakes Slam into New Federal Law

Adding legal pressure is the TAKE IT DOWN Act, a new federal law signed by President Trump himself in May 2025. The Act is narrow: it targets non-consensual intimate imagery, including sexually explicit deepfakes, and does not reach a non-sexual political parody like this one. Even so, the legislative movement signals a clear and growing appetite for broader federal regulation of AI content. Legal experts say platform immunity is waning.

“Senior executives must ask: Is our content-governance strategy keeping pace with regulatory exposure?” says Deborah Marshall, a leading technology and media-law partner at Hanson & Co. “The risk doesn’t just sit with creators; it extends to platforms, advertisers, and corporate partners who profit from distribution.”

The video illustrates how easily a satirical post can breach multiple legal boundaries, setting a dangerous precedent for content platforms. Industry analysts estimate that fines for deepfake-related non-compliance could hit $50,000 per violation, with private lawsuits adding millions more in liability.

The Boardroom Imperative: Core Strategy, Not Crisis Management

Trump’s highly-publicized AI fighter-jet video is no longer just political theater; it’s an urgent boardroom case study. In a digital economy where AI tools are consumer-grade and virality cycles are measured in minutes, the governance gap between content creation and accountability is dangerously wide.

Every CEO must immediately implement or aggressively upgrade the following core strategies:

- AI Content Policy: Establish explicit, zero-tolerance internal guidelines for the creation, sharing, and especially the moderation of all synthetic or manipulated assets.

- Copyright Verification Systems: Implement rigorous, real-time protocols to ensure all music, imagery, and likenesses used in content are fully licensed before being posted, closing the door on costly disputes like the removal demand made by Loggins.

- Crisis Response Frameworks: Develop rapid-action plans for immediate removal, public apology, or correction when infringing deepfakes appear on company platforms, prioritizing speed to mitigate reputational damage.

- Investor Disclosure: Integrate AI governance risks into all risk reporting and ESG disclosures, treating content liability as a material financial risk.
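The copyright verification step in the list above can be pictured as a simple gate that runs before anything is posted. The following is a minimal sketch under stated assumptions, not a real compliance system: the `Asset` class, the `REQUIRED_LICENSES` table, and the function names are all hypothetical, and the license categories are illustrative rather than legal guidance. The music entry mirrors the synchronization and master use licenses discussed in the FAQ.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from asset kind to the permissions a post would need.
# The music entry mirrors the two licenses named in the FAQ; the other
# entries are illustrative placeholders, not legal guidance.
REQUIRED_LICENSES = {
    "music": {"synchronization", "master_use"},
    "image": {"image_license"},
    "likeness": {"likeness_release"},
}


@dataclass
class Asset:
    """A piece of third-party material used in a post (hypothetical model)."""
    name: str
    kind: str
    licenses_held: set = field(default_factory=set)


def missing_licenses(assets):
    """Map each under-licensed asset name to the sorted licenses it lacks."""
    gaps = {}
    for asset in assets:
        # Unknown kinds are routed to manual review rather than waved through.
        required = REQUIRED_LICENSES.get(asset.kind, {"manual_review"})
        missing = required - asset.licenses_held
        if missing:
            gaps[asset.name] = sorted(missing)
    return gaps


def can_publish(assets):
    """Allow publication only when no asset has a licensing gap."""
    return not missing_licenses(assets)


# Example: a track with only one of the two music licenses blocks the post.
post = [
    Asset("theme_song.mp3", "music", {"synchronization"}),
    Asset("crowd_photo.jpg", "image", {"image_license"}),
]
print(missing_licenses(post))  # {'theme_song.mp3': ['master_use']}
print(can_publish(post))       # False
```

Defaulting unknown asset kinds to manual review is a deliberate choice in this sketch: a gate that fails closed is slower, but it avoids publishing material whose rights status nobody has checked.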

What started as an attention-grabbing, 19-second clip has evolved into a cautionary moment for global business. It demonstrates that AI imagery, once dismissed as mere parody, is now a major frontier for copyright, defamation, and securities-level risk that demands immediate strategic attention.

Donald Trump Fighter Jet AI Video FAQs

1. What is the main legal basis for Kenny Loggins' demand to remove the song?

The primary legal issue is copyright infringement. Loggins' statement refers to the video's use as "unauthorized use of my performance of 'Danger Zone'." This suggests that Donald Trump or the original video creator did not obtain the necessary licenses: a synchronization license for the underlying composition, typically granted by the music publisher, and a master use license for the sound recording, typically granted by the record label. The artist's objection is both legal (lack of permission) and ethical/political (association with a divisive message).

2. Why did Kenny Loggins specifically object to his song being used in this political video?

Loggins stated that he was not asked for permission and would have denied it because he opposes the video's divisive message. In his public statement, he wrote, "I can't imagine why anybody would want their music used or associated with something created with the sole purpose of dividing us." He emphasized that he believes music should be a way of "celebrating and uniting each and every one of us," and the video—which depicts a figure labeled "King Trump" attacking "No Kings" protesters—directly contradicts his values.

3. Does the recent "Take It Down Act" apply to this type of political AI deepfake video?

No, the "Take It Down Act" does not apply to this specific political video. The federal "Tools to Address Known Exploitation by Immobilizing Technological Deepfakes on Websites and Networks Act" (TAKE IT DOWN Act), signed into law by President Trump in May 2025, is narrowly focused on addressing Non-Consensual Intimate Imagery (NCII), often called "deepfake revenge porn." The law specifically criminalizes the publication and requires the removal of sexually explicit deepfakes or authentic intimate images shared without consent, and it does not cover non-sexual political parodies or controversial content like the "Danger Zone" video.