The Future of Robotics: How MIT's New AI Model is Transforming Robot Learning

In an era where artificial intelligence (AI) continues to redefine what machines can achieve, a recent breakthrough from the Massachusetts Institute of Technology (MIT) is pushing the boundaries of robotic intelligence. Rather than training each robot on a narrow, task-specific dataset, MIT's researchers are borrowing the approach behind large language models (LLMs) like GPT-4: pretraining on large, varied data. This approach promises to change how robots learn, adapt, and interact with the world around them.

MIT's Legacy of Innovation

MIT is no stranger to pioneering advancements that change the face of technology and engineering. As one of the world’s premier research institutions, MIT has consistently been at the forefront of robotics, artificial intelligence, and machine learning. The institute’s robust ecosystem includes the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL), where some of the most significant breakthroughs in robotics and AI have originated.

Home to brilliant minds and a culture of interdisciplinary collaboration, MIT's CSAIL has laid the foundation for various projects, from self-driving cars to humanoid robots capable of learning social cues. The introduction of Heterogeneous Pretrained Transformers (HPT) is yet another milestone that showcases MIT’s continuous commitment to reshaping the future.

Rethinking Robotic Training: From Precision to Scale

Traditionally, training robots has relied on imitation learning, where robots observe and mimic human actions. While effective for basic tasks, this method struggles when conditions change—such as when a room’s lighting shifts or new obstacles appear. The robots’ inability to adapt comes down to a simple fact: they lack sufficient data to generalize effectively in varied environments.

MIT’s new research aims to overcome this limitation by incorporating strategies inspired by LLMs. In the realm of AI, large language models have achieved remarkable success by being exposed to massive and varied datasets, enabling them to understand and generate human-like text. Inspired by these models' capacity for contextual understanding and adaptability, the MIT team set out to bring this principle to robotics.

How HPT Works: A Blueprint for Universal Learning

At the heart of this innovation is the Heterogeneous Pretrained Transformers (HPT) architecture. Unlike traditional robotic training systems that rely on narrowly focused data sets, HPT draws on a diverse array of sensor inputs. These include visual data from cameras, distance measurements from lidar, tactile information, and more. The heterogeneity of the data allows the model to learn about the physical world in a way that mimics human perception, where multiple senses contribute to decision-making.

Lirui Wang, lead author of the groundbreaking paper, elaborates on this: “In the language domain, the data are all just sentences. In robotics, given all the heterogeneity in the data, if you want to pretrain in a similar manner, we need a different architecture.” The HPT architecture is specifically designed to process this wide array of sensory data through a transformer, which excels at identifying patterns and relationships in large datasets.

Larger transformers generally have more capacity to synthesize complex information. The intended outcome is an AI system that can perform across a variety of settings, adapting to new environments with far less task-specific training than conventional approaches require.
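To make the idea of feeding heterogeneous sensor data into one transformer more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. It assumes that each sensor gets its own encoder that maps readings into a shared token space, and that a single attention step then mixes the tokens. The names `TOKEN_DIM`, `make_projection`, and `encode_observation`, the sensor dimensions, and the random projections standing in for learned encoders are all hypothetical choices made for illustration.

```python
import math
import random

TOKEN_DIM = 8  # assumed width of the shared token space (hypothetical value)


def make_projection(in_dim, out_dim, rng):
    """Random linear map standing in for a learned, sensor-specific encoder."""
    scale = 1.0 / math.sqrt(in_dim)
    return [[rng.uniform(-scale, scale) for _ in range(in_dim)]
            for _ in range(out_dim)]


def project(matrix, vector):
    """Apply a linear map to one sensor reading, producing one token."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head self-attention with identity query/key/value maps.

    A real transformer learns these maps and stacks many layers; this
    keeps only the token-mixing step.
    """
    dim = len(tokens[0])
    mixed = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        weights = softmax(scores)
        mixed.append([sum(w * tok[i] for w, tok in zip(weights, tokens))
                      for i in range(dim)])
    return mixed


def encode_observation(observation, encoders):
    """Map each sensor reading into the shared space, then mix the tokens."""
    tokens = [project(encoders[name], reading)
              for name, reading in observation.items()]
    return self_attention(tokens)


rng = random.Random(0)
# Hypothetical sensor layouts: 16 image features and 12 lidar range readings.
encoders = {
    "camera": make_projection(16, TOKEN_DIM, rng),
    "lidar": make_projection(12, TOKEN_DIM, rng),
}
observation = {
    "camera": [rng.random() for _ in range(16)],
    "lidar": [rng.random() for _ in range(12)],
}
fused = encode_observation(observation, encoders)
print(len(fused), len(fused[0]))  # prints "2 8": one 8-wide token per sensor
```

The point of the sketch is the interface. Inputs of different sizes end up as same-sized tokens, so supporting another sensor only means adding another encoder while the shared attention step stays unchanged.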

A Glimpse Into the Future: Universal Robot Brains

Imagine a world where roboticists or even consumers could download a pre-trained "universal robot brain" capable of understanding a wide range of tasks right out of the box. This vision, articulated by Carnegie Mellon University (CMU) associate professor David Held, underscores the ambitious potential of this research.

“Our dream is to have a universal robot brain that you could download and use for your robot without any training at all,” Held says. This universal brain would be to robotics what LLMs like GPT-4 are to language tasks: a powerful, adaptable model that can handle complexity and uncertainty.

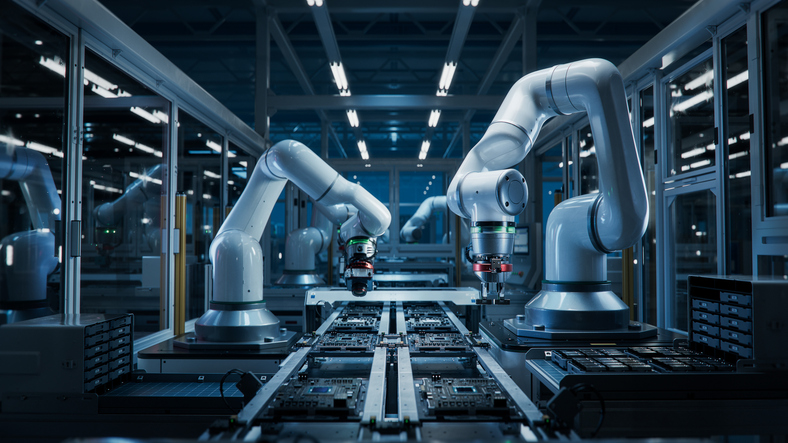

This universal model could transform industries ranging from manufacturing and logistics to healthcare and home automation. No longer would engineers have to painstakingly train each robot for specific tasks; instead, they could leverage the comprehensive knowledge embedded in an HPT-based system to tackle a wide array of challenges.

The Scaling Effect: Lessons From LLMs

The MIT team’s inspiration stems from observing the success of large-scale language models. With LLMs, performance typically improves as the model size and the diversity of the training data increase. The research team hopes that applying this principle to robotics will yield similar leaps in capability, allowing robots to function seamlessly in environments where traditional training would fail.
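The article does not say what form this improvement takes. In language-model scaling studies, error is commonly modeled as a power law in model or dataset size, and the sketch below assumes that form. It estimates the exponent from (size, error) pairs with a least-squares fit in log-log space. The function name `fit_power_law` is hypothetical, and the data points are synthetic, generated from a known curve purely to check the fit. They are not measurements from the HPT work.

```python
import math


def fit_power_law(sizes, errors):
    """Fit error = a * size**(-alpha) by least squares on log-log values.

    Returns the pair (a, alpha).
    """
    xs = [math.log(s) for s in sizes]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Synthetic points from error = 5 * size**(-0.3); illustrative only.
sizes = [1e6, 1e7, 1e8, 1e9]
errors = [5.0 * s ** -0.3 for s in sizes]
a, alpha = fit_power_law(sizes, errors)
print(round(a, 3), round(alpha, 3))
```

With real measurements, a roughly straight line in log-log space would suggest that further scaling keeps paying off, while a flattening curve would suggest diminishing returns.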

The scalability of HPT is what makes it particularly promising. Training larger transformers demands more resources, but the researchers expect the gains in capability to justify the cost. The HPT model could serve as a repository of experiential knowledge, learning from both simulated and real-world environments to build a broad understanding of physical tasks.

Challenges and Future Directions

While the potential for a universal robot brain is compelling, the path to achieving this vision is not without obstacles. One major challenge is ensuring that robots can handle the diversity of data inputs without being overwhelmed by noise or irrelevant information. Additionally, training these large-scale models demands significant computational resources, including advanced hardware and high energy consumption.

There is also the matter of data privacy and security. Just as LLMs can inadvertently store and leak sensitive information, a universal robot brain could face similar vulnerabilities. Developers will need to implement robust safety mechanisms to ensure that these intelligent systems operate ethically and securely.

Despite these challenges, the research community is optimistic. The scaling of robotic models is still in its infancy, but the lessons learned from LLMs suggest that continued investment and development could yield substantial breakthroughs. If scaling robotics training leads to similar gains as seen in natural language processing, we might witness a new era where robots transition from specialized, single-task machines to versatile, general-purpose agents.

The Broader Implications: From Labs to Real Life

The implications of HPT and similar architectures extend far beyond academia. For industries ranging from manufacturing to personal assistance, the ability to deploy robots that can adapt on the fly could lower costs, increase efficiency, and open up new possibilities for automation. This could lead to robots that handle more complex supply chain logistics, assist with precision agriculture, or provide more personalized elder care.

Healthcare could particularly benefit from adaptive robots. Imagine an operating room assistant robot that can respond to surgeons' commands, adapt to changes in the environment, and assist in different types of procedures with minimal recalibration. Similarly, robots in the service industry could enhance customer experiences by being more responsive and engaging.

Education and accessibility are also promising areas for development. A universal brain capable of contextual learning could aid in building robots that teach or assist students, especially those with learning disabilities, adapting their methods to cater to different learning styles.

Disaster response is yet another area that could see significant advancements. Equipped with an HPT-based universal brain, rescue robots could better navigate debris, analyze structural stability, and adapt to unforeseen hazards—all critical for saving lives in crisis situations.

MIT’s Broader Vision for AI and Robotics

MIT's commitment to advancing both theoretical and applied research in AI and robotics is a driving force behind these developments. The institution’s interdisciplinary focus—combining engineering, computer science, and cognitive sciences—fosters an environment where bold ideas can become reality.

The HPT architecture reflects MIT’s strategic approach: learning from the successes of other fields and applying those lessons to tackle unique challenges. MIT envisions a future where AI seamlessly integrates with human life, enhancing productivity and solving some of the most pressing global challenges.

The institute is not just looking to develop smarter robots; it’s also invested in the ethical implications of AI and robotics. From bias mitigation to ensuring that AI systems benefit all of society, MIT remains at the forefront of fostering responsible innovation.

Scaling Into a New Era

MIT's foray into scaling data for robot training marks a pivotal shift in how the world views robotics. By learning from the principles that have catapulted LLMs into prominence, the HPT architecture could be the key to unlocking truly versatile robotic intelligence. While still in the early stages, the potential of such technology points to a future where robots can understand, learn, and act in an ever-changing world.

This leap forward is more than an academic achievement. It points toward machines that learn and adapt far more flexibly than today's robots. As researchers continue to refine these models, the dream of a universal, adaptable robot brain inches closer to reality, promising to change not just how we interact with technology, but how technology interacts with us. The future of robotics is not just about mimicking human action. It is about creating machines that thrive in complexity, embody adaptability, and push the boundaries of possibility.